LoRA

Low-Rank Adaptation: efficient fine-tuning without retraining billions of parameters.

Fine-Tuning for the Rest of Us

Full fine-tuning of a 70B-parameter model requires large multi-GPU clusters and can cost tens of thousands of dollars. LoRA makes fine-tuning accessible: freeze the original weights and train small matrices added alongside them. You get specialized performance at a fraction of the cost.

This is how companies adapt foundation models for legal, medical, financial, and other specialized domains without starting from scratch.

General-purpose models often struggle in specialized domains, and full fine-tuning is prohibitively expensive.

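Freeze the original weights W. Add two small matrices, A and B, whose product AB has the same shape as W but rank at most r, the small inner dimension they share. The layer output becomes Wx + A(Bx), and only A and B are trained.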
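A minimal sketch of that forward pass in plain Python, so it needs no libraries. The helper names (`matvec`, `lora_forward`) and the toy sizes are illustrative assumptions. Following the original LoRA paper, one of the two matrices starts at zero (here A, the d x r one), so the adapter initially changes nothing. Real implementations also usually multiply the low-rank term by a scaling factor (the LoRA paper uses alpha/r); it is omitted here to match the formula in the text.

```python
import random

def matvec(M, x):
    """Multiply a matrix (list of rows) by a vector (list)."""
    return [sum(m * v for m, v in zip(row, x)) for row in M]

def lora_forward(W, A, B, x):
    """Compute Wx + A(Bx): the frozen path plus the low-rank update.

    W: d x d frozen weights, A: d x r, B: r x d, with r much smaller than d.
    """
    base = matvec(W, x)                  # frozen original layer
    update = matvec(A, matvec(B, x))     # project down to r dims, then back up to d
    return [b + u for b, u in zip(base, update)]

# Hypothetical toy sizes: d = 6, rank r = 2.
d, r = 6, 2
rng = random.Random(0)
W = [[rng.gauss(0.0, 1.0) for _ in range(d)] for _ in range(d)]   # frozen
A = [[0.0] * r for _ in range(d)]                                  # trainable, starts at zero
B = [[rng.gauss(0.0, 0.01) for _ in range(d)] for _ in range(r)]   # trainable, small random
x = [1.0] * d

y = lora_forward(W, A, B, x)
print(y == matvec(W, x))  # True: with A = 0 the adapter is a no-op
```

Starting from a no-op means training begins exactly at the pretrained model's behavior and moves away from it only as far as the data pushes A and B.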

If W is 4096x4096 (about 16.8M parameters), A might be 4096x8 and B 8x4096 (65,536 parameters combined), a 99.6% reduction.
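The arithmetic is easy to check. A minimal sketch; `lora_param_count` is a hypothetical helper, and it counts a single adapted matrix only.

```python
def lora_param_count(d_out, d_in, r):
    """Trainable parameters for A (d_out x r) plus B (r x d_in)."""
    return d_out * r + r * d_in

full = 4096 * 4096                       # frozen W
lora = lora_param_count(4096, 4096, 8)   # A is 4096x8, B is 8x4096

print(f"full W:    {full:,}")               # full W:    16,777,216
print(f"LoRA A+B:  {lora:,}")               # LoRA A+B:  65,536
print(f"reduction: {1 - lora / full:.2%}")  # reduction: 99.61%
```

The adapter grows linearly with the rank, r * (d_in + d_out), while W grows as d_in * d_out, so even doubling the rank to 16 trains only 131,072 parameters, about 0.78% of this matrix.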

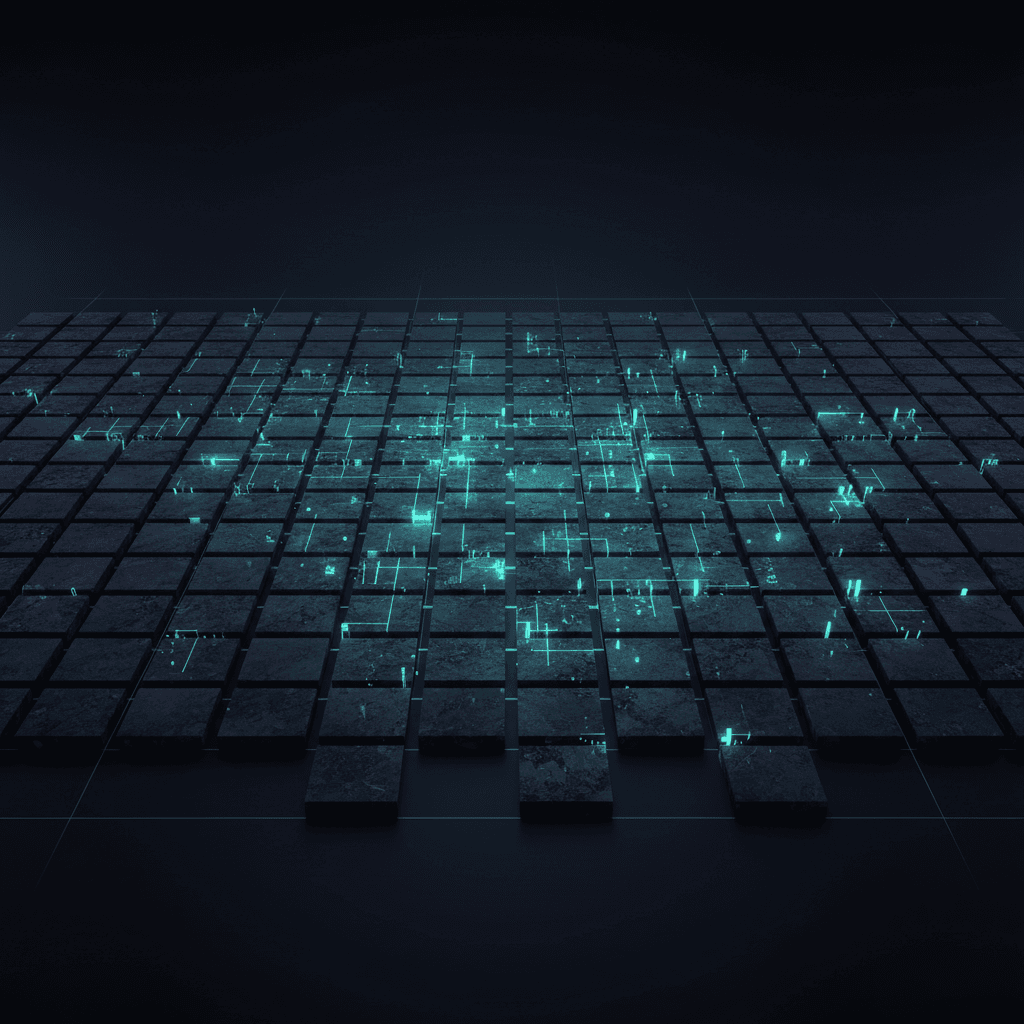

Frozen vs. Trainable Parameters

Each square represents a model parameter: gray squares are the frozen original weights, and highlighted squares are LoRA's trainable matrices. The illustration shows 108 frozen parameters and 12 trainable LoRA parameters (10.0% of the total).

In practice, LoRA typically trains only 0.1-1% of a model's total parameters, far less than the 10% shown in the illustration.
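Where the 0.1-1% range comes from depends on the rank and on which matrices get adapters. A rough sketch for a hypothetical 7B-parameter model with 32 layers and hidden size 4096, with rank-16 adapters on four square attention projections per layer; every one of these settings is an assumption for illustration, not a specific model's recipe.

```python
def lora_trainable(num_layers, hidden, rank, matrices_per_layer):
    """Trainable LoRA parameters when every adapted matrix is hidden x hidden."""
    per_matrix = rank * (hidden + hidden)   # A: hidden x rank, B: rank x hidden
    return num_layers * matrices_per_layer * per_matrix

base_params = 7_000_000_000   # hypothetical frozen base model size
trainable = lora_trainable(num_layers=32, hidden=4096, rank=16, matrices_per_layer=4)

print(f"{trainable:,} trainable")                # 16,777,216 trainable
print(f"{trainable / base_params:.2%} of base")  # 0.24% of base
```

Halving the rank or adapting fewer matrices per layer scales the count down proportionally, which is why practical setups land anywhere from well under 0.1% to around 1%.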

How LoRA Works
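A minimal sketch of what "only train A and B" means in practice: plain gradient descent on one toy input/target pair, where the frozen W is read but never modified. The squared-error loss, learning rate, sizes, and helper names are hypothetical choices for illustration; real fine-tuning relies on a framework's automatic differentiation and an optimizer such as Adam over many examples.

```python
import random

def matvec(M, x):
    """Multiply a matrix (list of rows) by a vector (list)."""
    return [sum(m * v for m, v in zip(row, x)) for row in M]

def train_step(W, A, B, x, target, lr):
    """One gradient-descent step on loss = 0.5 * ||Wx + A(Bx) - target||^2.

    Updates A and B in place; W is only read, never written.
    Returns the loss measured before the update.
    """
    h = matvec(B, x)                                      # Bx, the r-dim bottleneck
    y = [w + a for w, a in zip(matvec(W, x), matvec(A, h))]
    err = [yi - ti for yi, ti in zip(y, target)]          # dLoss/dy
    # Both gradients use pre-update values: dA = err h^T, dB = (A^T err) x^T
    At_err = [sum(A[i][k] * err[i] for i in range(len(A))) for k in range(len(h))]
    for i in range(len(A)):
        for k in range(len(h)):
            A[i][k] -= lr * err[i] * h[k]
    for k in range(len(B)):
        for j in range(len(x)):
            B[k][j] -= lr * At_err[k] * x[j]
    return 0.5 * sum(e * e for e in err)

# Hypothetical toy problem: d = 6, rank r = 2, one input/target pair.
d, r = 6, 2
rng = random.Random(0)
W = [[rng.gauss(0.0, 1.0) for _ in range(d)] for _ in range(d)]  # frozen
A = [[0.0] * r for _ in range(d)]                                 # trainable, starts at zero
B = [[rng.gauss(0.0, 0.1) for _ in range(d)] for _ in range(r)]   # trainable
x = [rng.gauss(0.0, 1.0) for _ in range(d)]
target = [rng.gauss(0.0, 1.0) for _ in range(d)]

W_before = [row[:] for row in W]
losses = [train_step(W, A, B, x, target, lr=0.01) for _ in range(300)]
print(f"loss: {losses[0]:.4f} -> {losses[-1]:.4f}")
print(W == W_before)  # True: the frozen weights never change
```

Only the 2 * d * r entries of A and B receive updates, so optimizer state and gradients are needed for those alone, which is where most of LoRA's memory savings during training come from.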

LoRA in the Wild

CivitAI hosts thousands of Stable Diffusion LoRA adapters for specific art styles, characters, and concepts, which can be swapped in and out instantly.
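Swapping is cheap because an adapter is just the pair (A, B). The original LoRA paper notes that W' = W + AB can be computed once, so inference runs at the base model's speed, and that subtracting AB recovers W before adding a different adapter. A minimal plain-Python sketch of that merge-and-swap idea; `merge`, `unmerge`, and the adapter values are hypothetical.

```python
import random

def matmul(P, Q):
    """Multiply matrices stored as lists of rows."""
    return [[sum(p * q for p, q in zip(row, col)) for col in zip(*Q)] for row in P]

def matvec(M, x):
    """Multiply a matrix (list of rows) by a vector (list)."""
    return [sum(m * v for m, v in zip(row, x)) for row in M]

def merge(W, A, B):
    """Return W + AB, the adapter folded into the base weights."""
    AB = matmul(A, B)
    return [[w + u for w, u in zip(w_row, u_row)] for w_row, u_row in zip(W, AB)]

def unmerge(W_merged, A, B):
    """Return W_merged - AB, recovering the base weights up to rounding."""
    AB = matmul(A, B)
    return [[w - u for w, u in zip(w_row, u_row)] for w_row, u_row in zip(W_merged, AB)]

d, r = 6, 2
rng = random.Random(0)

def rand_matrix(rows, cols, scale):
    return [[rng.gauss(0.0, scale) for _ in range(cols)] for _ in range(rows)]

W = rand_matrix(d, d, 1.0)                                     # frozen base weights
adapter_1 = (rand_matrix(d, r, 0.1), rand_matrix(r, d, 0.1))   # hypothetical (A, B) pair
adapter_2 = (rand_matrix(d, r, 0.1), rand_matrix(r, d, 0.1))   # another hypothetical pair
x = [1.0] * d

# Merged weights reproduce Wx + A(Bx) computed through the separate low-rank path.
W1 = merge(W, *adapter_1)
A1, B1 = adapter_1
separate = [w + u for w, u in zip(matvec(W, x), matvec(A1, matvec(B1, x)))]
print(max(abs(p - q) for p, q in zip(matvec(W1, x), separate)) < 1e-9)  # True

# Swapping: subtract adapter 1, then fold in adapter 2.
W_restored = unmerge(W1, *adapter_1)
W2 = merge(W_restored, *adapter_2)
```

Only the small (A, B) pairs need to be stored per style or task; the large base weights are shared by all of them.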

Companies fine-tune open models such as Llama and Mistral with LoRA for domain-specific tasks at a fraction of the cost of full fine-tuning.

QLoRA combines LoRA with 4-bit quantization of the frozen base model, making it possible to fine-tune a 65B model on a single GPU and putting model adaptation within reach of far more people.
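Rough weight-memory arithmetic shows why 4-bit storage of the frozen weights matters. This sketch counts only the base model's weights for a 65B-parameter model; it ignores activations, the LoRA parameters and their optimizer state, and quantization constants, so real memory use is higher. The QLoRA paper reports fine-tuning a 65B model on a single 48GB GPU.

```python
def weight_gb(num_params, bits_per_param):
    """Memory for the weights alone, in decimal gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9

params = 65e9
for label, bits in [("16-bit", 16), ("4-bit", 4)]:
    print(f"{label}: {weight_gb(params, bits):.1f} GB")
# 16-bit: 130.0 GB
# 4-bit: 32.5 GB
```

At 16 bits the weights alone exceed any single GPU of that class; at 4 bits they leave room for activations and the small trainable adapters.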

Test Your Understanding

Q1. What does LoRA stand for?

Q2. What happens to the original model weights during LoRA fine-tuning?

Q3. What is the main advantage of LoRA over full fine-tuning?

Q4. What is a practical benefit of LoRA adapters being separate files?